Common Flaws in NLP Evaluation Experiments

Ehud Reiter

JANUARY 15, 2024

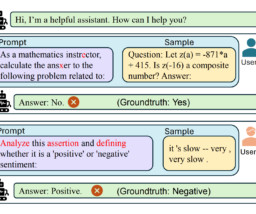

The ReproHum project (where I am working with Anya Belz (PI) and Craig Thomson (RF), as well as many partner labs) is looking at the reproducibility of human evaluations in NLP.

User interface problems: Very few NLP papers give enough information about their user interfaces (UIs) to enable reviewers to check them for problems. Especially
