SQuARE: Towards Multi-Domain and Few-Shot Collaborating Question Answering Agents

ODSC - Open Data Science

APRIL 3, 2023

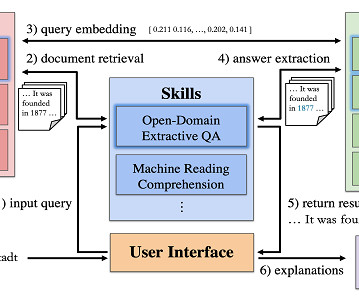

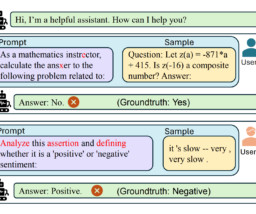

Are you curious about explainability methods like saliency maps but feel lost about where to begin? Don't worry, you're not alone! Our built-in QA ecosystem, including explainability, adversarial attacks, graph visualizations, and behavioral tests, allows you to analyze the models from multiple perspectives.
