7 Amazing NLP Hack Sessions to Watch out for at DataHack Summit 2019

Analytics Vidhya

OCTOBER 10, 2019

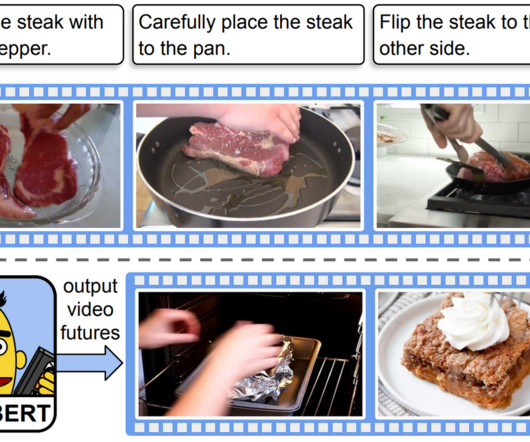

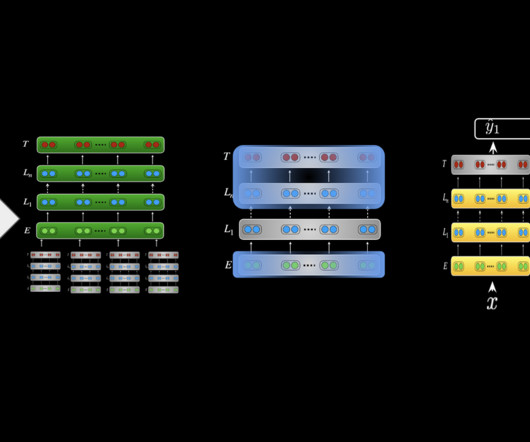

Picture a world where:

- Machines are able to have human-level conversations with us
- Computers understand the context of the conversation without having to be…

The post 7 Amazing NLP Hack Sessions to Watch out for at DataHack Summit 2019 appeared first on Analytics Vidhya.