From Rulesets to Transformers: A Journey Through the Evolution of SOTA in NLP

Mlearning.ai

APRIL 8, 2023

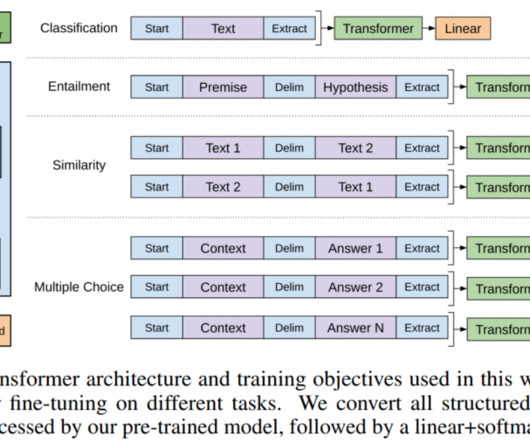

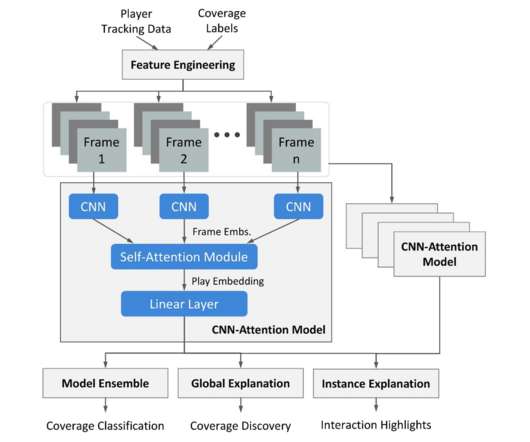

This article charts the evolution of state-of-the-art (SOTA) techniques in natural language processing (NLP) over the years, highlighting the key algorithms, influential figures, and groundbreaking papers that have shaped the field.

Evolution of NLP Models

To understand the full impact of the above evolutionary process...
