Peer Review Has Improved My Papers

Ehud Reiter

OCTOBER 10, 2023

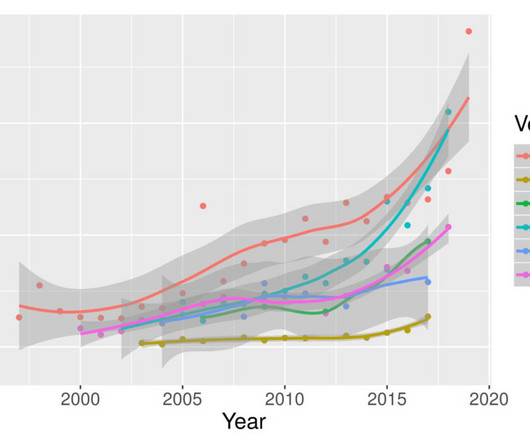

In short, by insisting that we do a proper evaluation, the reviewers massively improved our paper.

BLEU survey: better presentation of results

Another example is my 2018 paper, which presented a structured survey of the validity of BLEU; it was published in the Computational Linguistics journal.
