NLP Rise with Transformer Models | A Comprehensive Analysis of T5, BERT, and GPT

Unite.AI

NOVEMBER 8, 2023

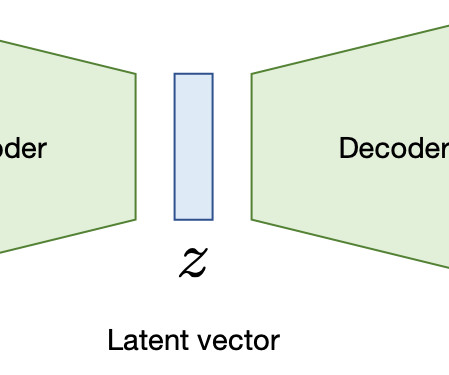

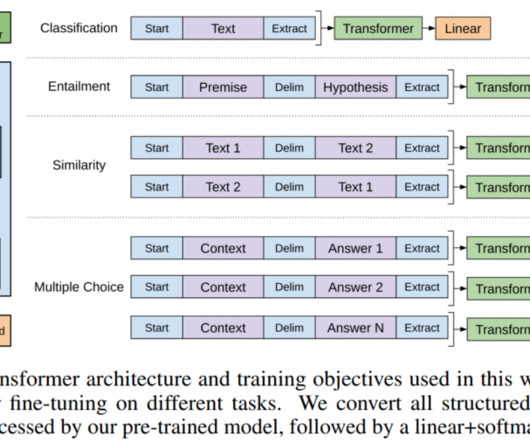

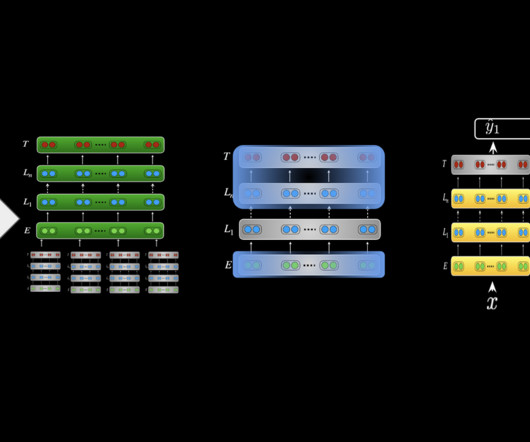

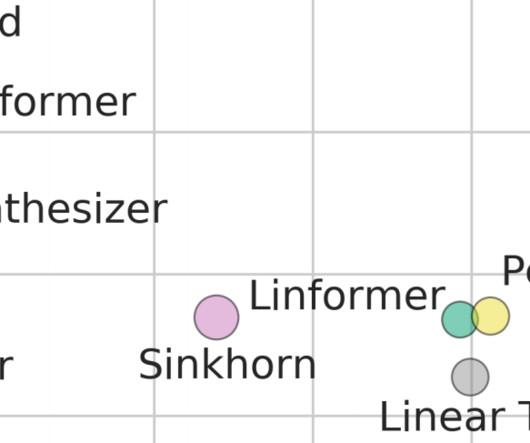

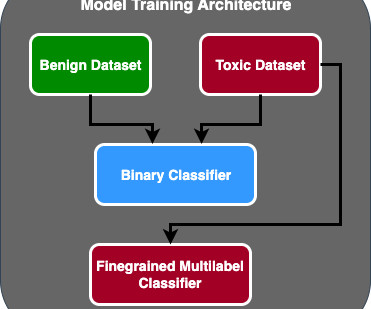

Recurrent Neural Networks (RNNs) became the cornerstone for these applications because they handle sequential data by maintaining a form of memory. However, RNNs were not without limitations.

Functionality: In the Transformer, each encoder layer has a self-attention mechanism and a feed-forward neural network.
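To make the encoder-layer description concrete, here is a minimal sketch of one layer in plain Python. It is an illustration under stated assumptions, not the implementation used by T5, BERT, or GPT: it uses a single attention head, adds residual connections as in the original Transformer design, and leaves out layer normalization, biases, and positional encodings. All function names, weight matrices, and input values are hypothetical and chosen only for readability.

```python
import math

def matmul(a, b):
    # Multiply two matrices stored as lists of rows.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    # Project every token into query, key, and value vectors.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Score each token against every other token, scaled by sqrt(d_k).
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted mix of the value vectors.
    return matmul(weights, v), weights

def feed_forward(x, w1, w2):
    # Position-wise network: linear map, ReLU, linear map.
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def add(a, b):
    # Element-wise sum, used for the residual connections.
    return [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(a, b)]

def encoder_layer(x, w_q, w_k, w_v, w1, w2):
    attended, weights = self_attention(x, w_q, w_k, w_v)
    x = add(x, attended)                    # residual around attention
    x = add(x, feed_forward(x, w1, w2))     # residual around feed-forward
    return x, weights

# Hypothetical toy input: 3 tokens, model width 2.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
w1 = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]    # 2 -> 4
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # 4 -> 2

out, weights = encoder_layer(tokens, identity, identity, identity, w1, w2)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence, and each row sums to 1. Unlike an RNN, which must step through the sequence one position at a time, this layer computes the scores for all token pairs at once.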
