Understanding Transformers: A Deep Dive into NLP’s Core Technology

Analytics Vidhya

APRIL 16, 2024

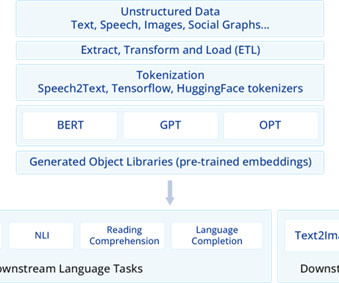

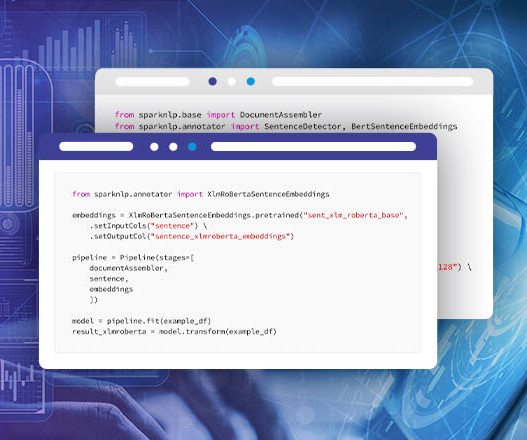

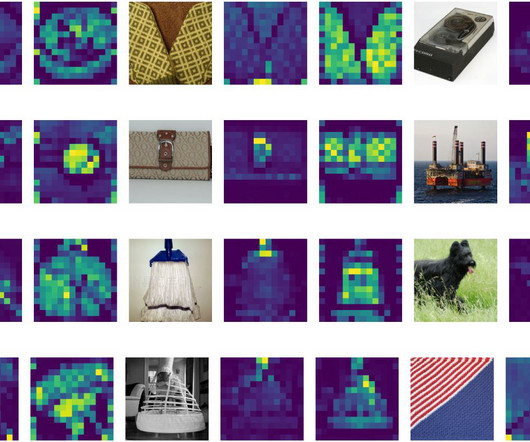

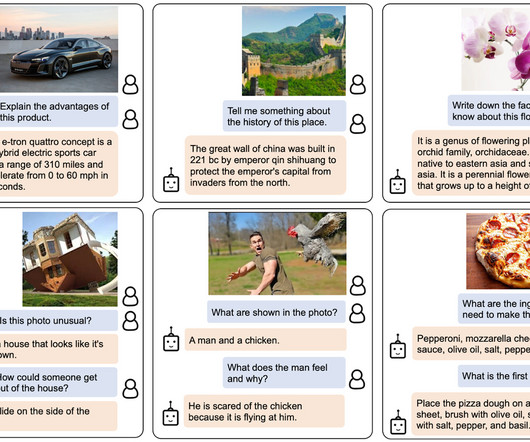

Introduction

Welcome to the world of Transformers, the deep learning architecture that has transformed Natural Language Processing (NLP) since its debut in 2017. These linguistic marvels are built around self-attention mechanisms. They have revolutionized how machines understand language, powering tasks that range from translating text to analyzing sentiment.

Let's personalize your content