Truveta LLM: First Large Language Model for Electronic Health Records

Towards AI

APRIL 12, 2023

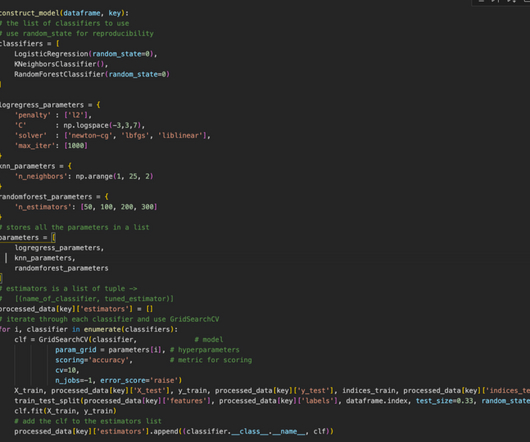

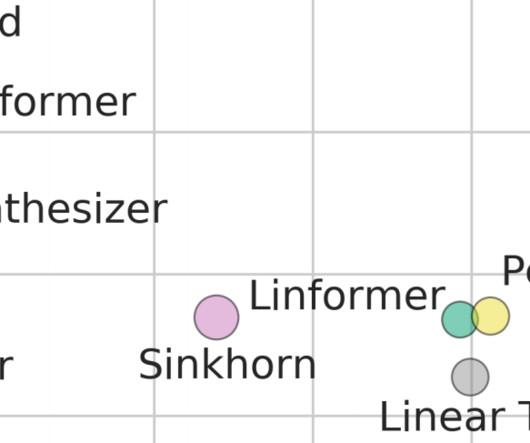

All of these companies were founded between 2013 and 2016 in various parts of the world. Their founding was soon followed by the arrival of large general-purpose language models such as BERT (Bidirectional Encoder Representations from Transformers).
