The State of Transfer Learning in NLP

Sebastian Ruder

AUGUST 18, 2019

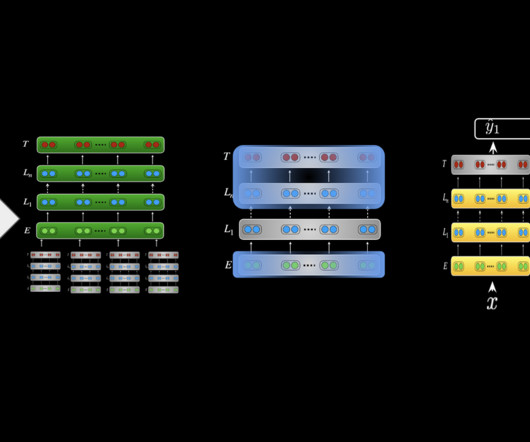

Later approaches then scaled these representations to sentences and documents (Le and Mikolov, 2014; Conneau et al.,

LM pretraining

Many successful pretraining approaches are based on variants of language modelling (LM). Early approaches such as word2vec (Mikolov et al., 2017; Peters et al.,
