Major trends in NLP: a review of 20 years of ACL research

NLP People

JULY 24, 2019

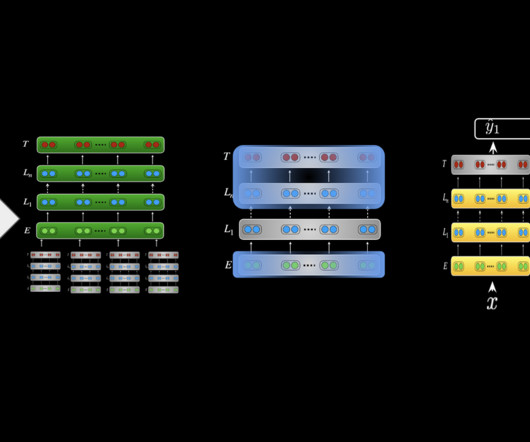

The 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019) is starting this week in Florence, Italy. The universal linguistic principle behind word embeddings is distributional similarity: a word can be characterized by the contexts in which it occurs. Sequence to sequence learning with neural networks.
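To make the distributional idea concrete, here is a minimal sketch that represents each word by raw counts of its neighbouring words and compares words by cosine similarity. The toy corpus, the window size, and the function names `cooccurrence_vectors` and `cosine` are illustrative assumptions, not something taken from the review. Real word embeddings are learned dense vectors rather than count vectors, but they rest on the same principle.

```python
from collections import Counter, defaultdict
import math


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own context
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical toy corpus: "cat" and "dog" share contexts, "stocks" does not.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases mice",
    "the dog chases cars",
    "investors buy stocks",
]

vectors = cooccurrence_vectors(corpus)
sim_cat_dog = cosine(vectors["cat"], vectors["dog"])
sim_cat_stocks = cosine(vectors["cat"], vectors["stocks"])
print(f"cat vs dog:    {sim_cat_dog:.2f}")
print(f"cat vs stocks: {sim_cat_stocks:.2f}")
```

In this toy setting "cat" and "dog" come out as similar because they occur next to the same words ("the", "drinks", "chases"), while "cat" and "stocks" share no contexts at all.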
