Making Sense of the Mess: LLMs' Role in Unstructured Data Extraction

Unite.AI

MAY 29, 2024

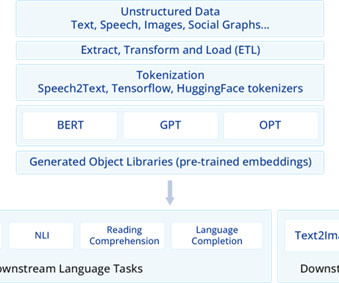

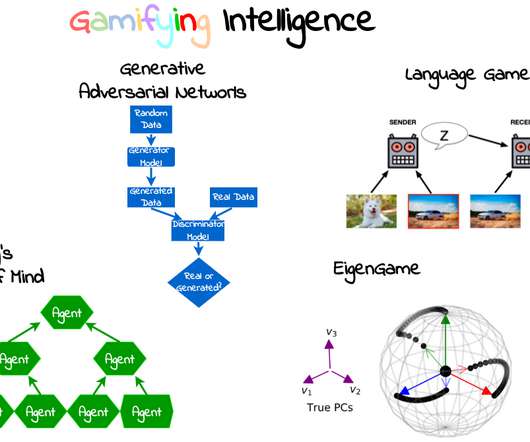

Source: A pipeline on Generative AI. This figure of a generative AI pipeline illustrates how models such as BERT, GPT, and OPT apply to data extraction. These LLMs are built on the transformer architecture and can perform various NLP tasks, including data extraction.
